M5 Competition Virtual Awards Ceremony

Notes

Notes from the M5 Forecasting Competition keynote speakers.

The M5 Forecasting Competition finished recently, in June 2020. On the 29th of October, the M Open Forecasting Center (MOFC) held a virtual awards ceremony. I jotted down some notes for myself from the keynote speakers.

Keynote Speakers

Spyros Makridakis / Founder

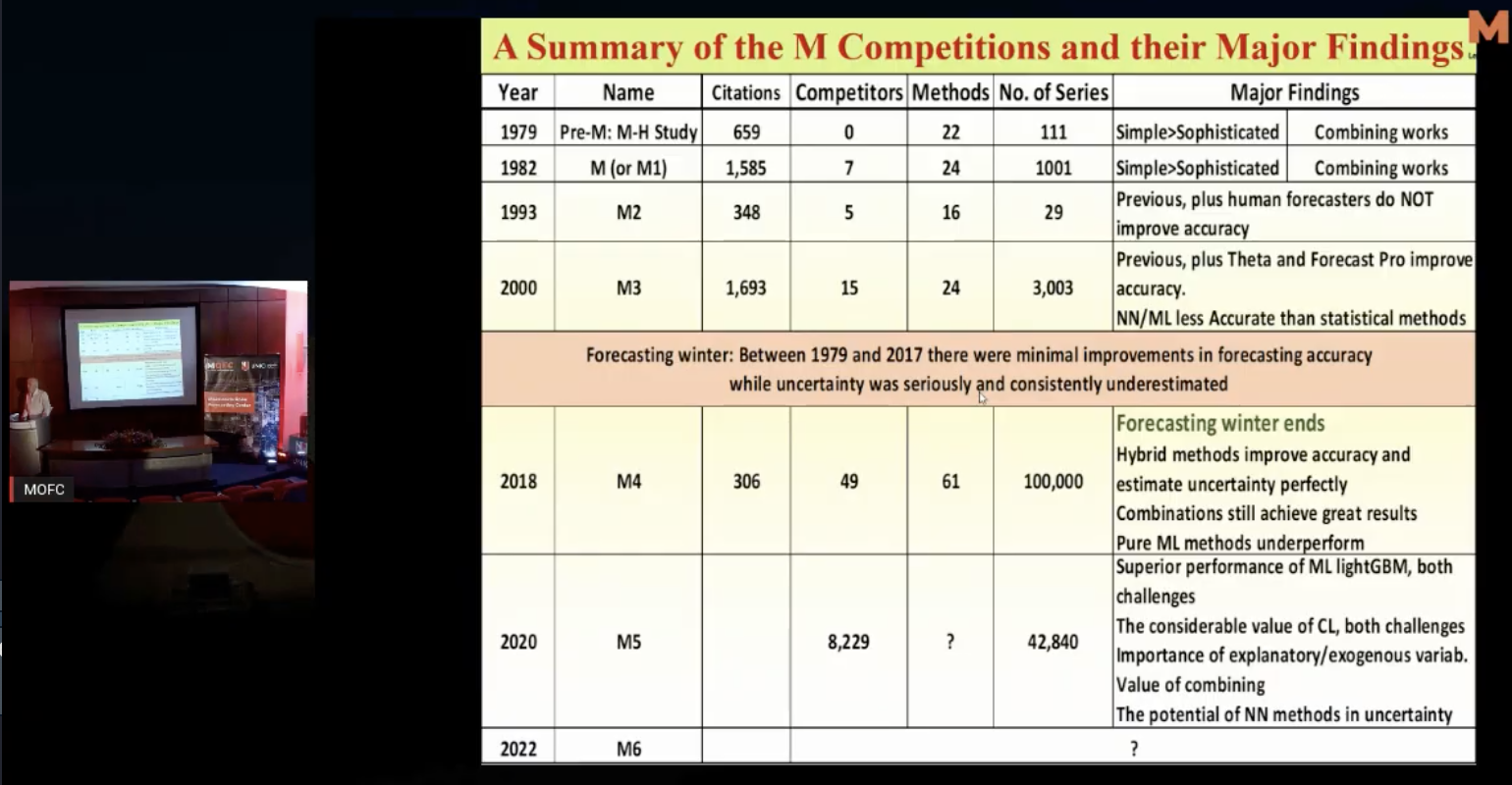

History of forecasting competitions

- Initial forecasting work focused on simpler statistical models and valued human judgement (albeit with minimal improvement in forecasting accuracy). The participants in the first several forecasting competitions were largely statisticians and the like.

- The M3 competition was a huge push forward, with ~10% accuracy improvement

- M4 onwards, lots more machine learning solutions

M5 Competition

- Best method is 22.4% more accurate than best statistical benchmark

- Higher levels of hierarchy show better improvement than lower levels

- Middle of CI distribution shows most improvement; marginal/worse at tails

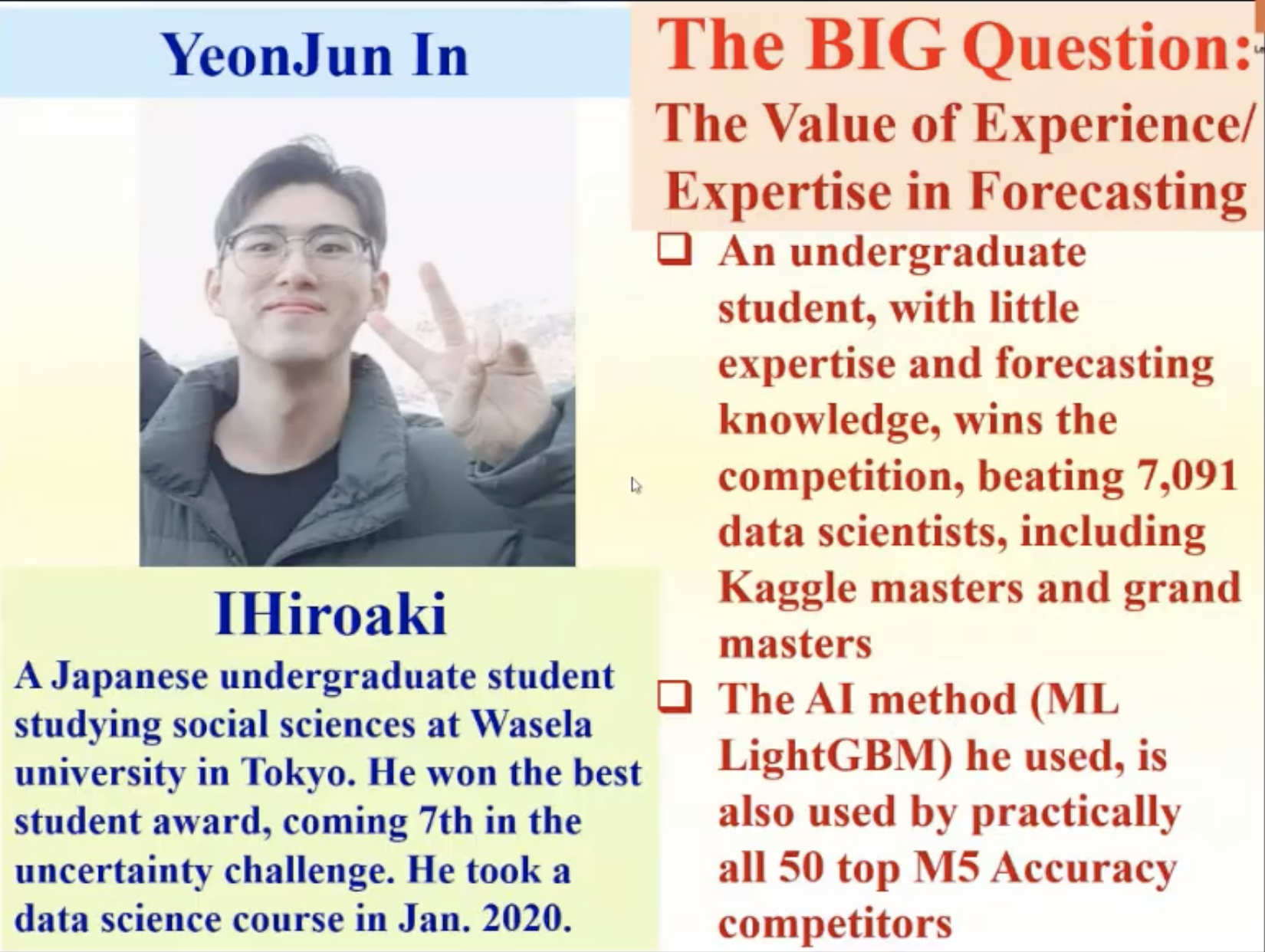

What is the value of experience?

- Winner is an undergraduate student, has no experience in forecasting, and little/no experience in data science!

- Winner beat 7000+ masters & grand masters!

- Winning method

- LightGBM used by top 50 competitors

- Evidence that understanding the computational algorithms instead of forecasting itself is paying off

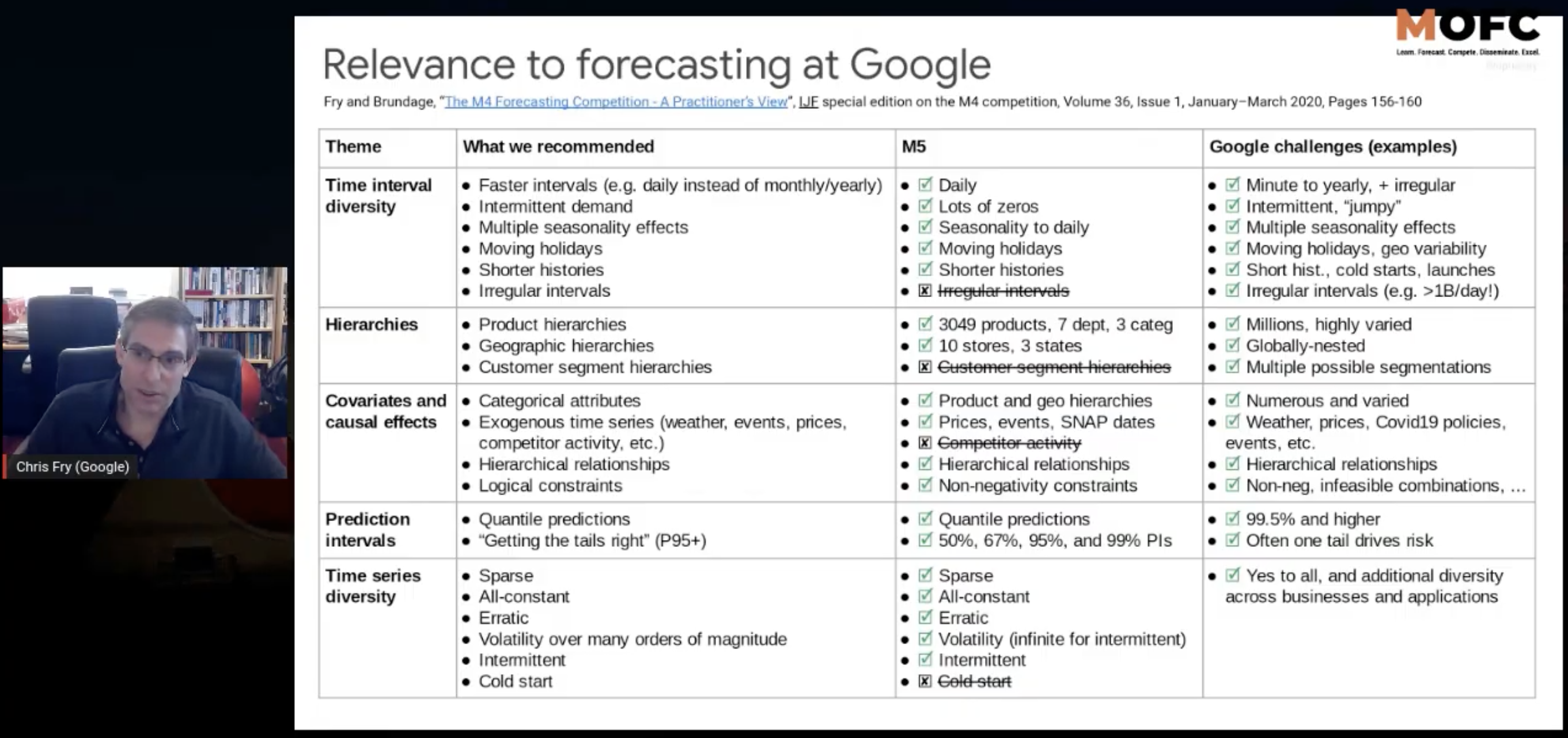

Chris Fry / Google

- Chris got involved by analyzing M4 and its applicability to real-world data sets. His critique and the subsequent issues addressed in the M5 competition:

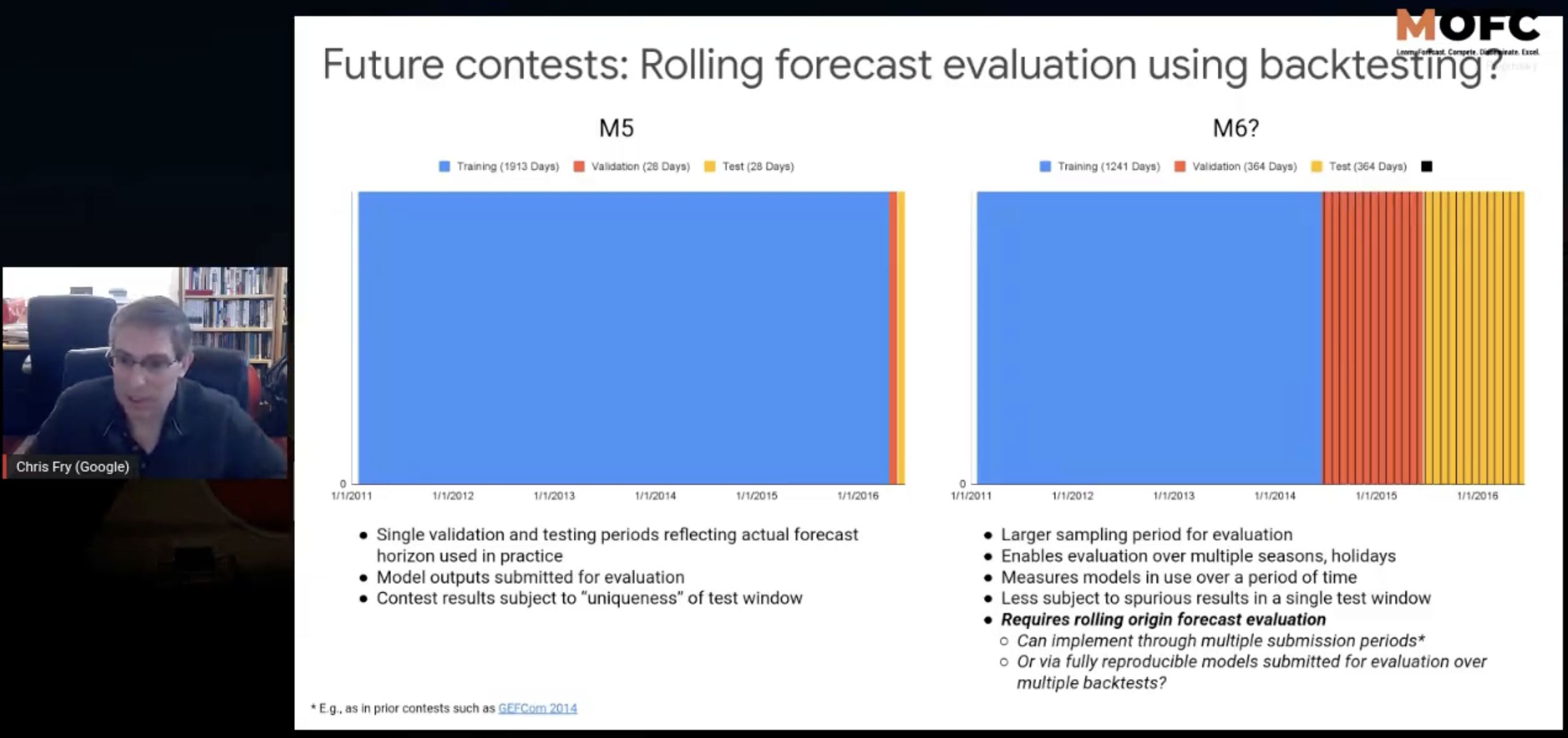

- Spoke of the challenge of single time-window submission:

- Short period of 28 days

- Results are subject to ‘uniqueness’ of test window

- At Google, they do something a bit different:

- large sample for evaluation

- year long window will cover all holidays & other events

- should provide better stability in measurement of algorithm performance

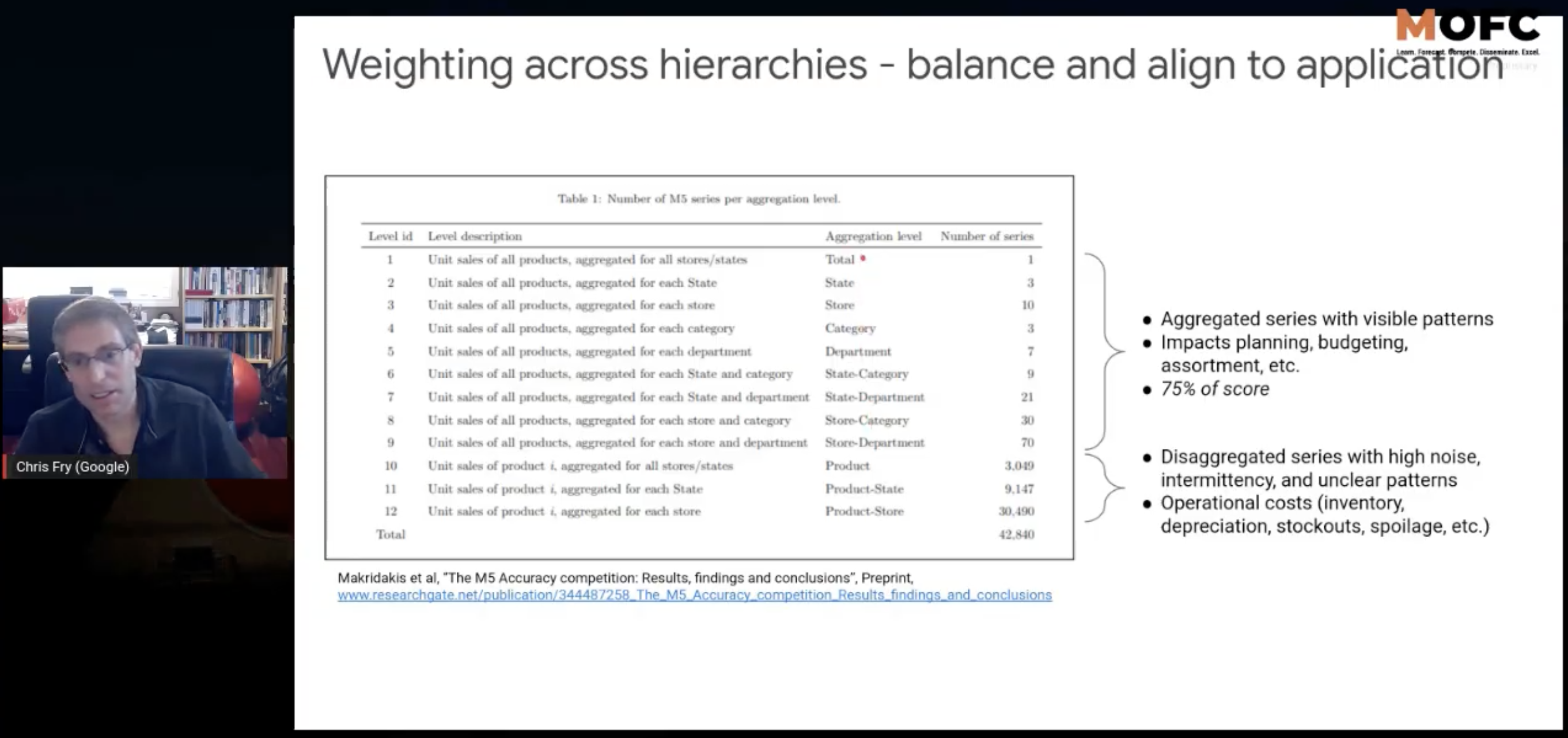
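To make the single-window concern concrete for myself, here is a minimal sketch of rolling-origin evaluation: the same forecaster is scored on 13 consecutive 28-day windows (roughly a year) instead of only the last one. This is my own illustration, not Google's or the M5 organisers' code. The function names, the seasonal-naive forecaster, the MAE metric, and the synthetic series with a single event spike are all assumptions made for the example.

```python
from statistics import mean


def seasonal_naive(history, horizon, season=7):
    """Repeat the last `season` observations across the forecast horizon."""
    last_cycle = history[-season:]
    return [last_cycle[i % season] for i in range(horizon)]


def rolling_origin_mae(series, forecaster, horizon=28, n_windows=13, step=28):
    """Score `forecaster` on `n_windows` consecutive test windows, oldest first."""
    scores = []
    for k in reversed(range(n_windows)):
        end = len(series) - k * step      # exclusive end of this test window
        start = end - horizon             # forecast origin for this window
        if start < 7:
            raise ValueError("series too short for the requested windows")
        train, test = series[:start], series[start:end]
        forecast = forecaster(train, horizon)
        scores.append(mean(abs(f - y) for f, y in zip(forecast, test)))
    return scores


# Synthetic daily demand: a clean weekly pattern plus one made-up event spike.
series = [10 + (day % 7) for day in range(730)]
series[700] += 50

scores = rolling_origin_mae(series, seasonal_naive)
print(f"last window only:   {scores[-1]:.2f}")
print(f"mean of 13 windows: {mean(scores):.2f}")
```

In this toy setup the spike sits just before the final forecast origin, so the last window alone makes the method look much worse than its average over the year. That is the 'uniqueness of the test window' effect in miniature.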

- 75% of the score comes from the top 9 of the 12 aggregation levels. The bottom three are disaggregated, intermittent, noisy series.

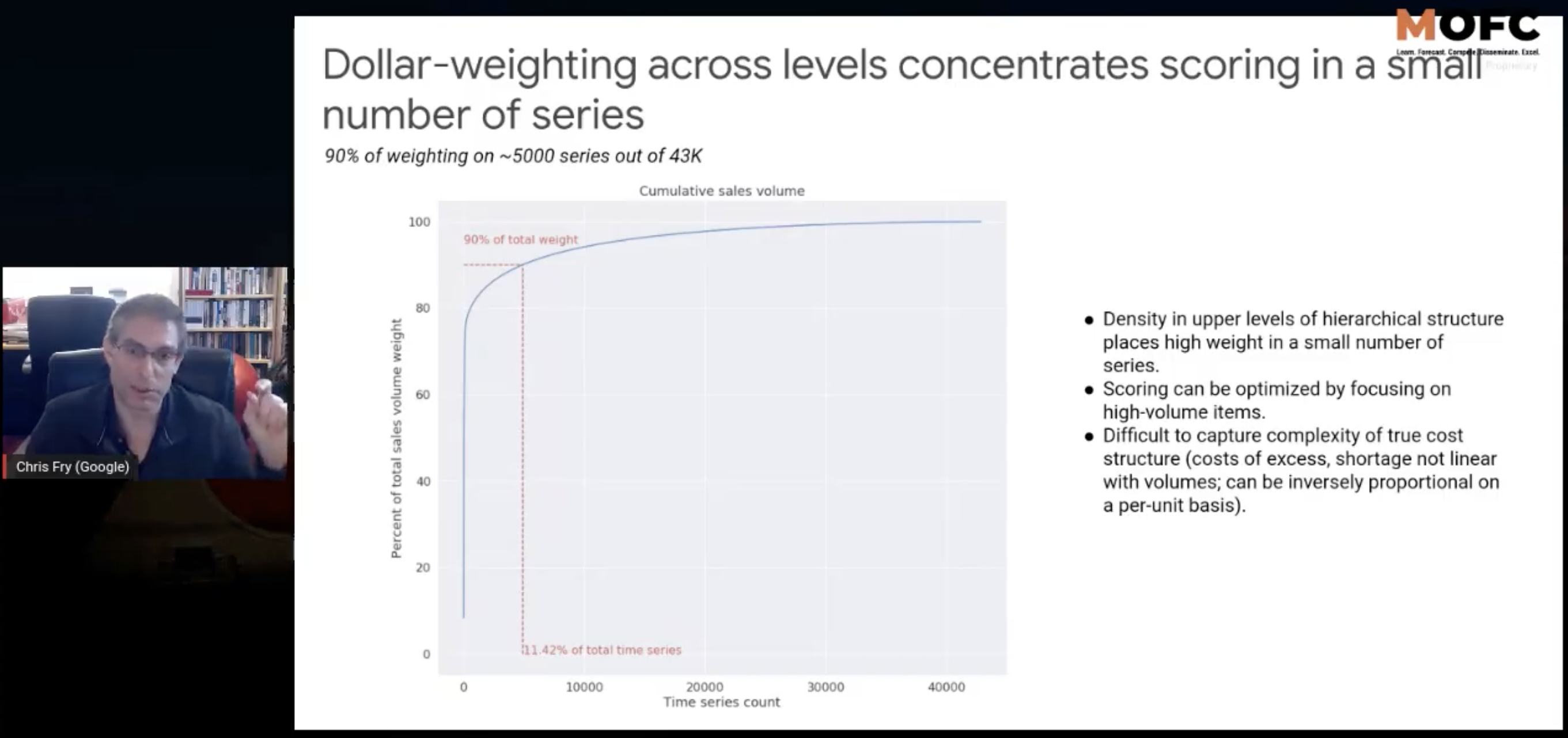

- Price weighting: 90% of the weight came from 11% of the total series. Competition winners can focus on the left sliver and win… but businesses do care about the right (inventory, spoilage etc).
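A quick way to check this kind of concentration on your own data is to sort the series by weight and count how many are needed to reach a target share. The sketch below assumes each series' weight is proportional to its dollar sales, which is my understanding of the M5 weighting. The function name and the sales figures are hypothetical and only there to show the arithmetic.

```python
def share_of_series_for_weight(dollar_sales, target=0.90):
    """Return the smallest fraction of series whose combined sales reach `target`."""
    total = sum(dollar_sales)
    if total <= 0:
        raise ValueError("total dollar sales must be positive")
    running = 0.0
    # Walk from the largest series down, accumulating weight until the target is met.
    for count, value in enumerate(sorted(dollar_sales, reverse=True), start=1):
        running += value
        if running >= target * total:
            return count / len(dollar_sales)
    return 1.0


# Made-up dollar sales for ten series (not M5 data).
sales = [600, 250, 80, 30, 15, 10, 6, 4, 3, 2]
fraction = share_of_series_for_weight(sales)
print(f"{fraction:.0%} of the series carry 90% of the weight")
```

A small result here means the score is dominated by a handful of high-revenue series, even though the long tail is where inventory and spoilage decisions get made.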

Brian Seaman / Walmart

Walmart sponsored the M5 competition and offered their real-world data for the competition.

- Why does Walmart care about forecasts?

- Inventory management, capacity planning, labor planning, financials, real estate, network capacity etc

- Walmart challenges

- 10k Stores x 100k Items, 2m associates!

- Varied - Top sellers, Long Tails, Seasonal/Steady series

- New stores - cold start challenges

- Opportunities

- Scaling & better reliability by relying on Cloud based solutions

- Develop relationships (product hierarchies, product obsolescence, superseding)

- Events like holidays

- M5 competition

- Tried to keep datasets large without getting overwhelming

- Intermittent demand, short histories and discontinued items; but no cold-starts since you need to develop some relationship models to previous like-items

- Item relationships - product taxonomy + geography

- Others - Events, pricing, weather

Addison Howard / Kaggle

- Overview of how Kaggle works

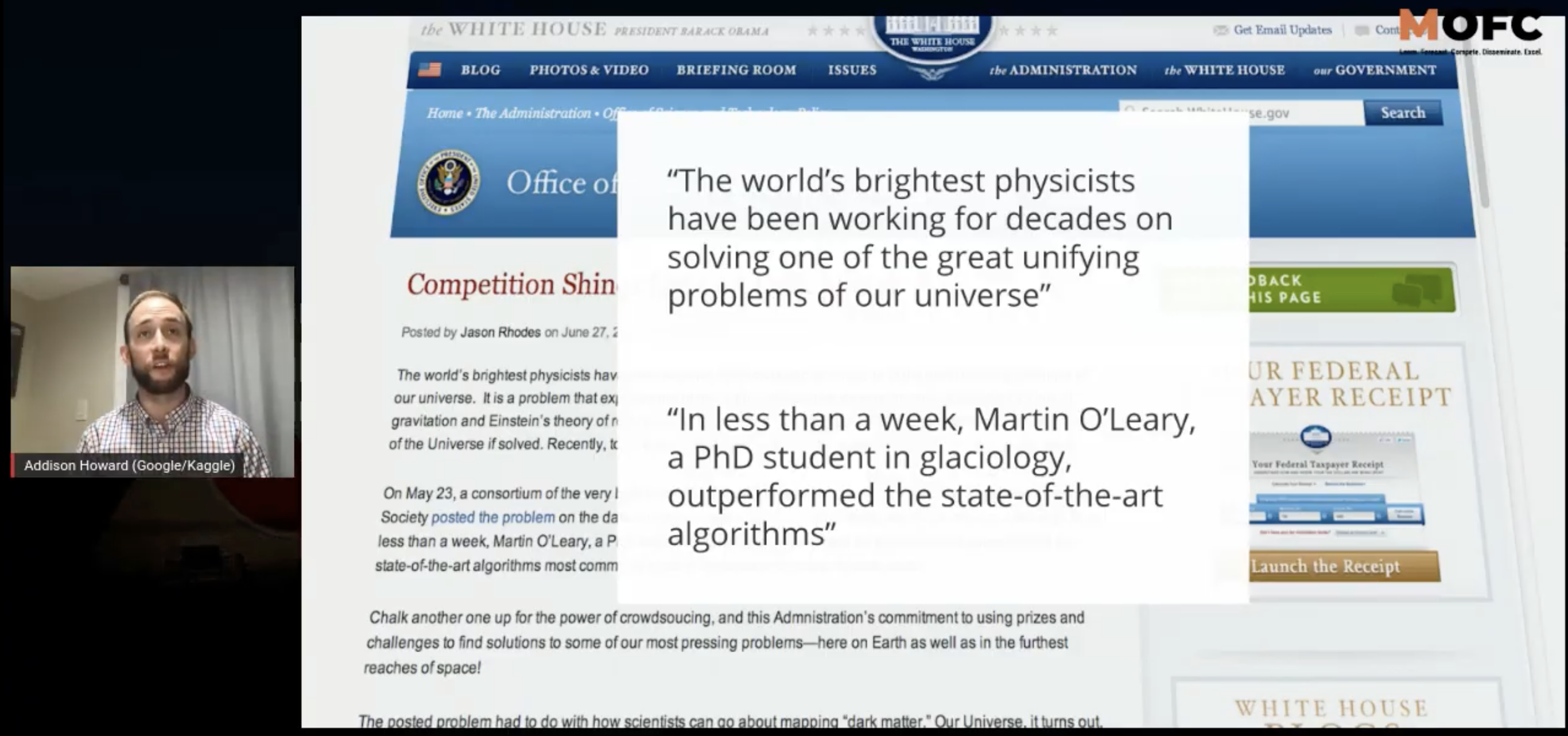

- Balance of expertise vs industry-specific knowledge?

- Yet again, Addison spoke about the need for expertise in a domain vs computational prowess given the development in AI/ML.

- This was a very eye-opening revelation!

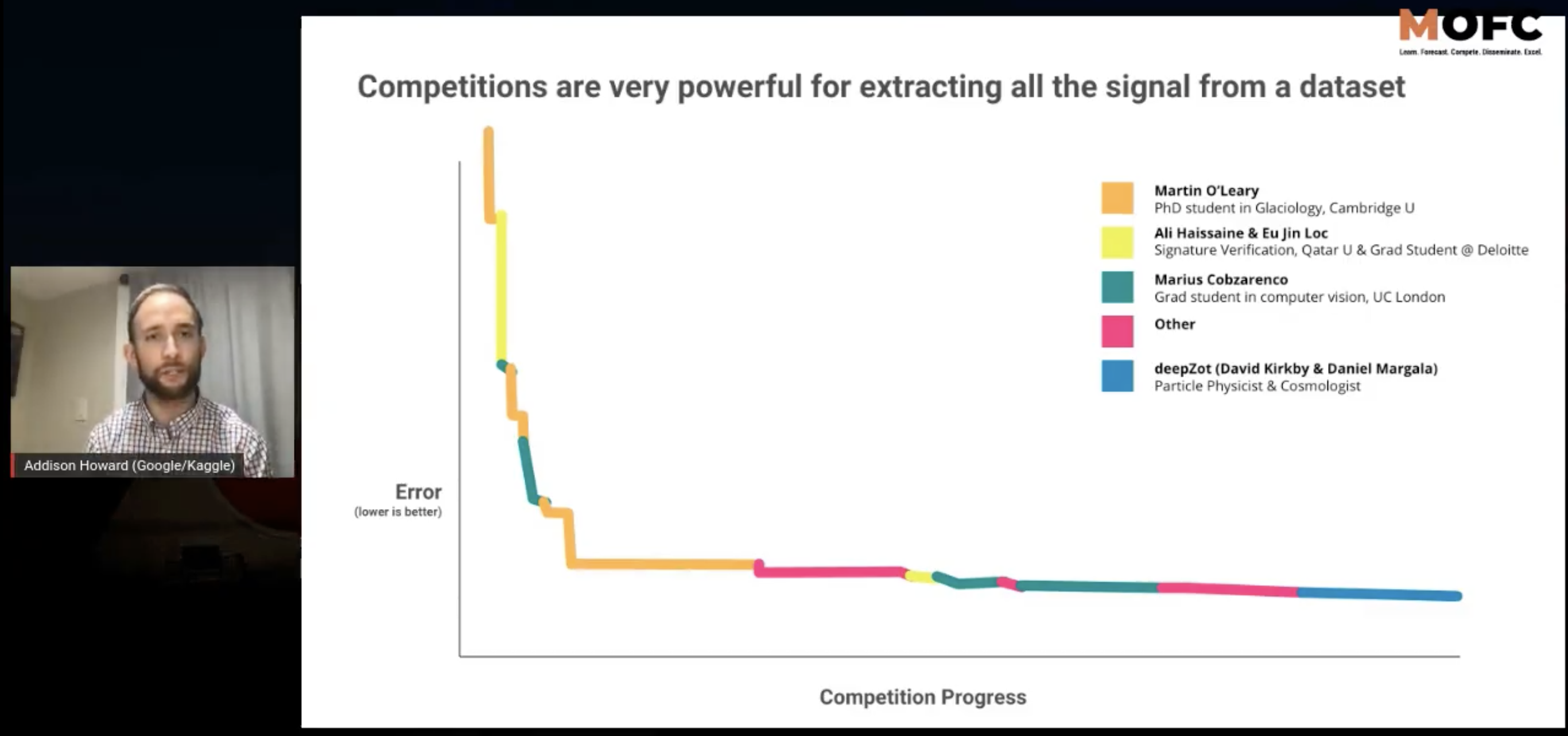

- He spoke about how competitions are an excellent platform for pushing our collective wisdom to solve complex problems. Look at these error bars for the same problem Martin solved, when picked up by teams!

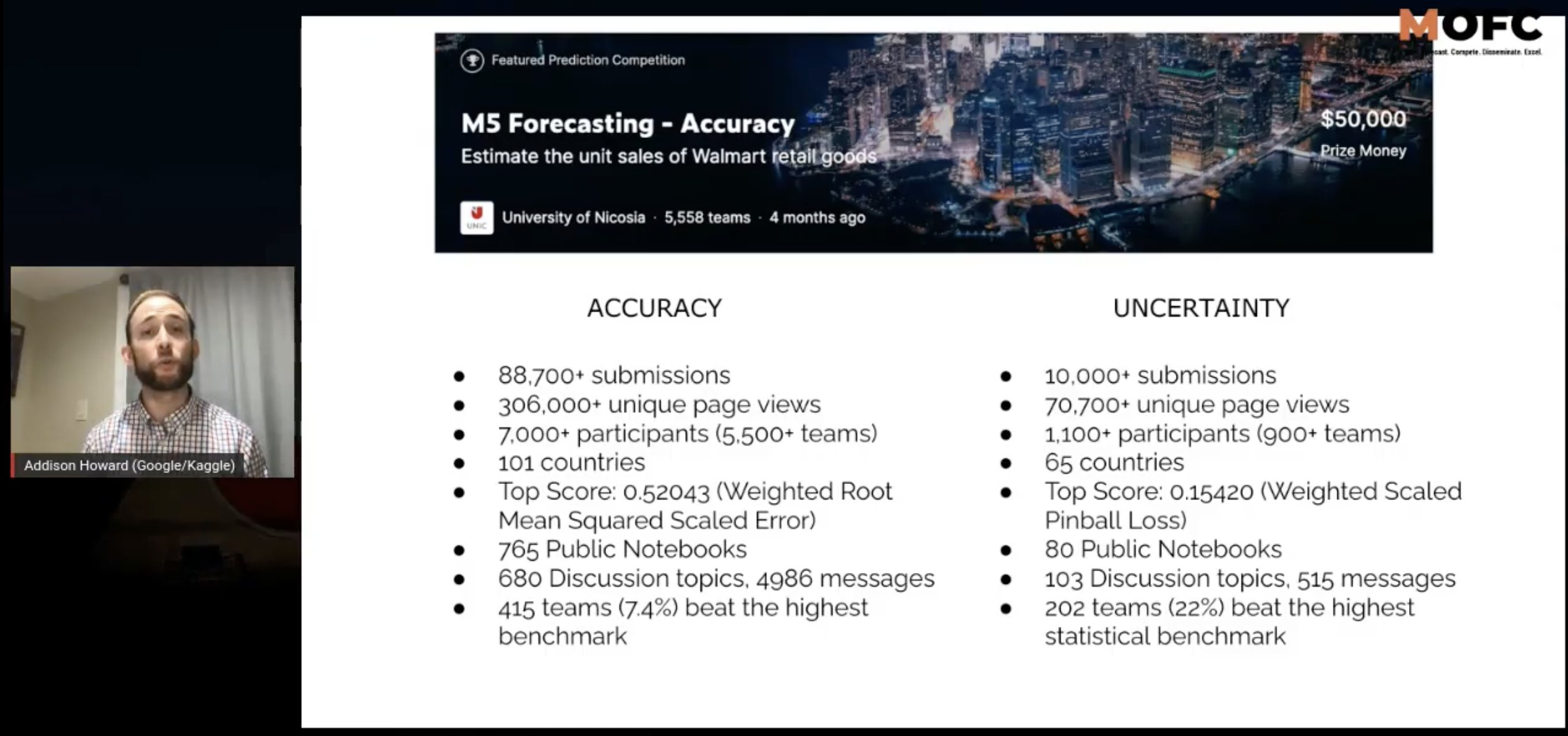

- Some insights into the M5 competition:


Len Tashman / Editor of Foresight

Comments about takeaways of M5:

- Good

- M5 was based on real data

- Data were hierarchical, and dirty (intermittent, noisy)

- ML is proving extremely valuable

- But…

- ML methods are still quite black-boxy. Businesses don’t inherently accept these models.

- Firms need to assess how these methods improve their operational performance

- Spoke of firms which do not use any methodical forecasting at all. How can they be brought into the fold?

- How can we find the balance between traditional statistical approaches and these new ML approaches in this field?